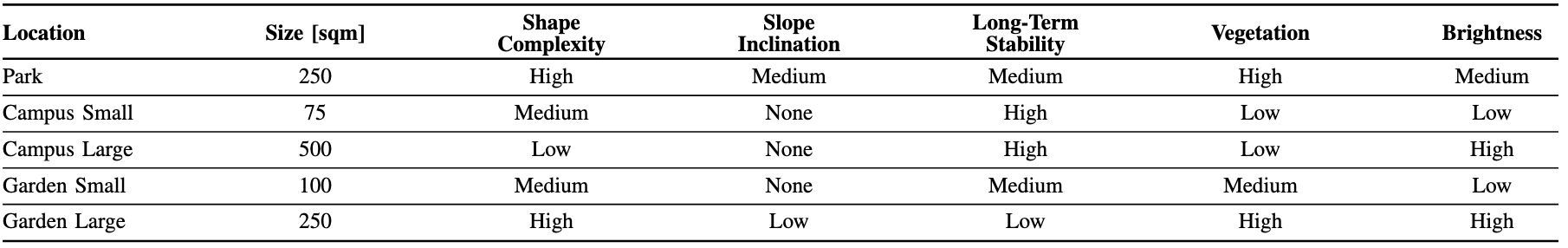

Dataset Overview

Locations

This dataset includes recordings from five distinct outdoor locations, captured on the campus of Esslingen University and in a private garden. Each environment was selected to present unique challenges for SLAM algorithm evaluation, offering diverse conditions in terms of layout, vegetation, lighting, and stability. By covering a broad range of real-world scenarios, from compact urban green spaces to expansive natural areas, the dataset supports comprehensive testing and development of SLAM systems and lets researchers evaluate and improve adaptability in varied settings.

Location Characteristics

Each environment was selected based on several important criteria, which help in evaluating the performance and robustness of SLAM systems. The following characteristics offer insight into how each location supports specific challenges and testing conditions:

- Size: The overall area size influences the scope of navigation. Large areas allow for testing long operational times and path optimization, while smaller areas focus on precision in confined spaces.
- Shape Complexity: Simple shapes (e.g., squares) are easier for robots to navigate, whereas more complex shapes introduce challenges with frequent turns and directional changes.
- Slope Inclination: Varying terrain slopes can influence sensor data, particularly from cameras. Steep slopes may shift sensors’ fields of view upward or downward, affecting the range of detected features.
- Long-Term Stability: Environmental stability over time affects the consistency of visual cues. Seasonal changes, growth of vegetation, or new obstacles may impact the reliability of location-based SLAM systems.
- Vegetation: Trees, shrubs, and other vegetation provide natural landmarks but can introduce occlusions and unique navigation obstacles. Dense vegetation areas demand robust feature detection and tracking capabilities.
- Brightness: Ambient lighting levels, from bright open spaces to shaded areas, affect visual perception systems. Dynamic lighting changes, such as shifting cloud cover, test a system’s ability to adapt to varying brightness levels.

Park

The Park location is a natural area with moderate slopes and varied vegetation, including trees and bushes. This setting provides both natural obstacles and landmarks, aiding navigation but also occasionally obstructing movement. The ambient brightness varies, adding to the challenge of dynamic environmental conditions for SLAM evaluations.

Campus Small

Campus Small is a compact lawn adjacent to a building, featuring minimal vegetation and a reflective glass surface that introduces visual localization challenges. The surrounding structures cast shadows, creating a low-brightness environment suited for controlled testing scenarios.

Campus Large

Situated on a rooftop, Campus Large offers an expansive area with minimal vegetation and an unobstructed horizon-like view. The stable brightness and few visual landmarks provide a distinct testing ground for navigation in open spaces with limited environmental cues.

Garden Small

Garden Small is a shaded garden area surrounded by buildings and a uniform wall on one side. It has moderate vegetation density and reduced brightness, making it challenging for consistent tracking due to limited visual variety.

Garden Large

The Garden Large location is a traditional garden with dense vegetation, low incline, and ample light. This visually rich setting increases the difficulty of navigation and path planning, with varied vegetation providing both complex landmarks and obstacles for localization.

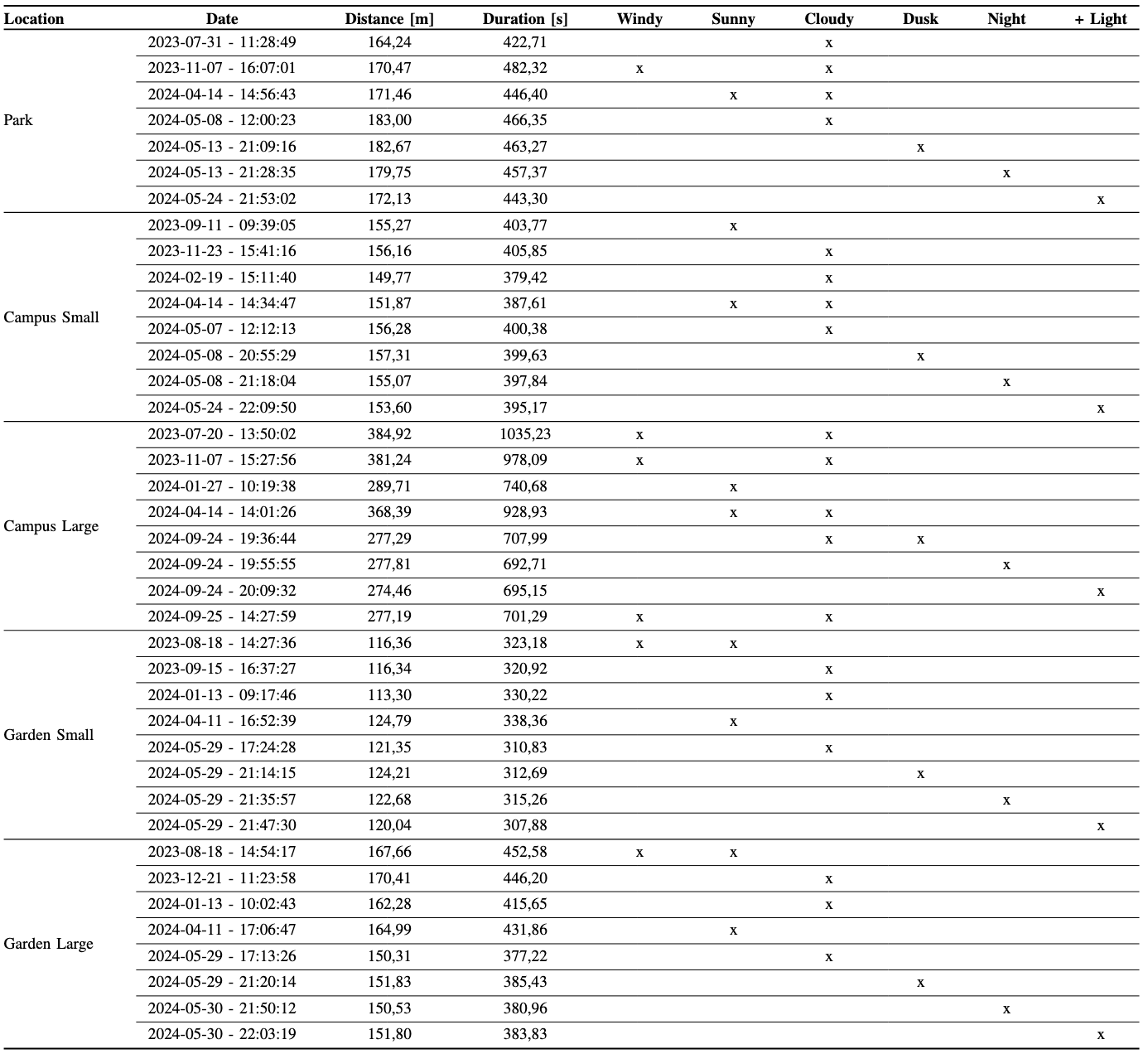

Recordings

The dataset captures the environmental variations of each location across different seasons and weather conditions, highlighting how factors such as vegetation, brightness, and lighting influence the robot’s performance. Each location was recorded in diverse weather conditions—including sunny, cloudy, and windy days—and at various times of day, from dusk to night, to assess sensor performance under varying light levels.

The table below provides a summary of the recordings and their associated environmental conditions, while the image above illustrates seasonal changes and different lighting conditions within the dataset. This variety allows researchers to evaluate SLAM systems in realistic outdoor scenarios, capturing the challenges presented by changing environmental factors.

To ensure consistency across recordings, we used a standardized route referred to as the Perimeter scenario. In this scenario, the robot follows the edge of the lawn area, completing three full rounds. Each round has slight trajectory variations and partial overlaps with the previous ones, creating realistic spatial coverage and enhancing the dataset with natural path variations. This approach enables the analysis of how environmental changes over time affect localization accuracy along a familiar path. It also supports loop-closing opportunities to maintain accurate long-term localization and promotes robust long-term mapping by capturing the same environment repeatedly under diverse conditions.
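Because the Perimeter route passes the same places on every round, a ground-truth trajectory should contain many revisits that a SLAM system can use as loop-closure candidates. The following is a minimal sketch of how such revisits could be counted. It assumes the trajectory has already been loaded as 2D positions in metres with ascending timestamps in seconds; the function name `revisit_pairs` and both thresholds are illustrative choices, not values taken from the dataset.

```python
import math


def revisit_pairs(positions, stamps, max_dist=0.5, min_dt=30.0):
    """Return index pairs (i, j), i < j, where the robot is back near an earlier pose.

    positions: list of (x, y) in metres; stamps: matching ascending times in seconds.
    max_dist and min_dt are illustrative thresholds, not dataset values.
    """
    pairs = []
    for j in range(len(positions)):
        for i in range(j):
            if stamps[j] - stamps[i] < min_dt:
                break  # stamps ascend, so every later i is even closer in time
            if math.dist(positions[i], positions[j]) <= max_dist:
                pairs.append((i, j))
    return pairs
```

The time gap `min_dt` keeps consecutive poses from counting as revisits. The double loop is quadratic in the number of poses, so a long trajectory would likely need downsampling or a spatial index first.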

Note: A winter recording for the Park location is not included due to heavy slippage caused by the slope inclination. Additionally, the Campus Large Summer recording lacks PiCam data, while the Campus Large Dusk and Garden Small Winter recordings do not contain data from the D435i internal IMU.

Data Description

Raw Data Overview

The dataset contains raw data organized by sequence directories, with sensor-specific subdirectories for easy access. Each sensor’s data is stored in its respective format, with images, IMU data, and ground truth recordings structured as follows:

- Sequence Directory
  - intelrealsense_D435i
    - rgb
      - timestamp.png
    - depth
      - timestamp.png
    - imu
      - imu.txt
    - rgb.txt
    - depth.txt
  - intelrealsense_T265
    - cam_left
      - timestamp.png
    - cam_right
      - timestamp.png
    - imu
      - imu.txt
    - cam_left.txt
    - cam_right.txt
  - picam
    - rgb
      - timestamp.png
    - rgb.txt
  - vn100
    - imu.txt
  - groundtruth.txt
- Calibration
  - intelrealsense_D435i.yaml
  - intelrealsense_T265.yaml
  - picam.yaml
  - vn100.yaml
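Since every image in the layout above is named by its timestamp, frames can be indexed directly from the file names. The sketch below lists the frames in one image directory and pairs two streams (for example D435i `rgb` and `depth`) by nearest timestamp. It assumes the file stem parses as a decimal number of seconds; the helper names `list_frames` and `associate` and the 20 ms tolerance are hypothetical and not part of the dataset tooling.

```python
from pathlib import Path


def list_frames(image_dir):
    """Return (timestamp, path) pairs for every PNG in image_dir, sorted by time.

    Assumption: each file stem is the frame timestamp written as a decimal
    number, e.g. "1700000000.123456.png".
    """
    frames = []
    for path in Path(image_dir).glob("*.png"):
        try:
            stamp = float(path.stem)
        except ValueError:
            continue  # skip files whose name is not a plain number
        frames.append((stamp, path))
    frames.sort(key=lambda item: item[0])
    return frames


def associate(frames_a, frames_b, max_dt=0.02):
    """Pair each frame in frames_a with the closest-in-time frame in frames_b.

    Pairs further apart than max_dt seconds are dropped. Both inputs must be
    sorted by timestamp, as returned by list_frames.
    """
    pairs = []
    j = 0
    for stamp_a, path_a in frames_a:
        # advance j while the next candidate in frames_b is at least as close
        while (j + 1 < len(frames_b)
               and abs(frames_b[j + 1][0] - stamp_a) <= abs(frames_b[j][0] - stamp_a)):
            j += 1
        if frames_b and abs(frames_b[j][0] - stamp_a) <= max_dt:
            pairs.append((path_a, frames_b[j][1]))
    return pairs
```

A call such as `associate(list_frames(seq / "intelrealsense_D435i" / "rgb"), list_frames(seq / "intelrealsense_D435i" / "depth"))`, with `seq` a `Path` to one sequence directory, would then yield matched RGB and depth image paths.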

Rosbag Generation

We provide scripts to convert the raw data into ROS bag files, available here. Note that the IMU publishing rate for the Intel RealSense D435i and T265 depends on the synchronization strategy used for the accelerometer and gyroscope. The generated topics include the following data streams:

| Sensor | ROS Topic | ROS Message Type | Publish Rate [Hz] |
|---|---|---|---|
| Intel RealSense D435i | /d435i/rgb_image | sensor_msgs/Image | 30 |
| Intel RealSense D435i | /d435i/depth_image | sensor_msgs/Image | 30 |
| Intel RealSense D435i | /d435i/imu | sensor_msgs/Imu | 300 |
| Intel RealSense T265 | /t265/image_left | sensor_msgs/Image | 30 |
| Intel RealSense T265 | /t265/image_right | sensor_msgs/Image | 30 |
| Intel RealSense T265 | /t265/imu | sensor_msgs/Imu | 265 |
| PiCam | /pi_cam_02/rgb_image | sensor_msgs/Image | 30 |
| VN100 | /vn100/imu | sensor_msgs/Imu | 65 |
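When checking a generated bag, it can help to compare the observed message rate of each topic with the nominal rate listed in the table. The sketch below is one possible way to do that in plain Python, assuming the message timestamps of a topic have already been extracted as seconds. The helper names and the 10 % tolerance are illustrative assumptions; the RealSense IMU rates in particular may deviate depending on the synchronization strategy.

```python
# Nominal publish rates in Hz, copied from the topic table above.
NOMINAL_RATES_HZ = {
    "/d435i/rgb_image": 30,
    "/d435i/depth_image": 30,
    "/d435i/imu": 300,
    "/t265/image_left": 30,
    "/t265/image_right": 30,
    "/t265/imu": 265,
    "/pi_cam_02/rgb_image": 30,
    "/vn100/imu": 65,
}


def measured_rate_hz(stamps):
    """Mean message rate over a list of timestamps in seconds."""
    if len(stamps) < 2:
        raise ValueError("need at least two timestamps")
    duration = max(stamps) - min(stamps)
    if duration <= 0:
        raise ValueError("timestamps span no time")
    return (len(stamps) - 1) / duration


def rate_matches(topic, stamps, rel_tol=0.1):
    """True if the measured rate is within rel_tol of the nominal rate."""
    nominal = NOMINAL_RATES_HZ[topic]
    return abs(measured_rate_hz(stamps) - nominal) <= rel_tol * nominal
```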